WGU D320 OA Study Guide I - 2025 | Key Insights into Cloud Security📖

As our lives grow more reliant on technology, keeping data secure has become as routine as locking the front door before bed. But here’s the catch: attackers are no longer easy to outwit, so we can’t afford to be easily outsmarted either. That is why it is essential to understand concepts such as logging levels, the data lifecycle, and the Zero Trust Network. If these terms sound like jargon from the upper reaches of tech, don’t fret: we will walk you through each of them.

- Different Logging Levels: Logging levels such as OFF, FATAL, ERROR, WARNING, INFO, DEBUG, TRACE, and ALL allow systems to control the verbosity of log output, helping developers and admins focus on critical issues while debugging or monitoring performance.

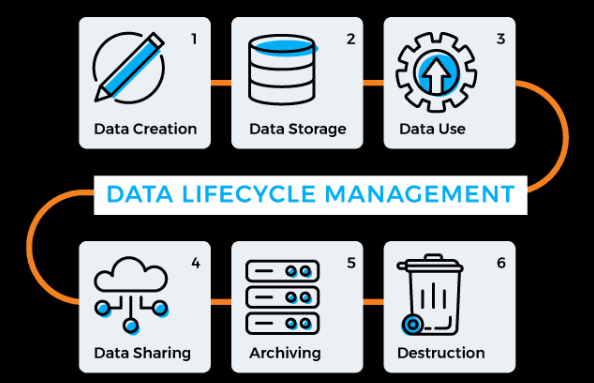

- The Data Lifecycle: The data lifecycle encompasses stages like creation, storage, usage, archiving, and deletion, ensuring that data is effectively managed, secured, and compliant with regulations throughout its lifespan.

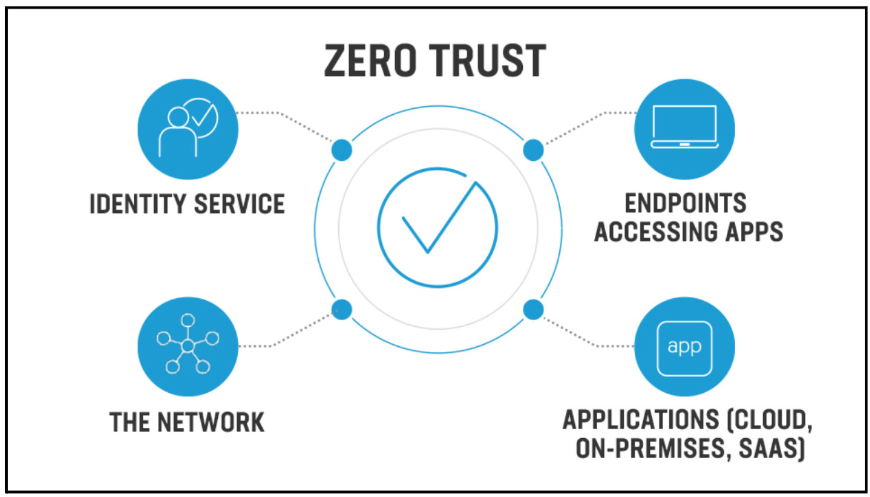

- Zero Trust Network: The Zero Trust model assumes no entity, inside or outside the network, is inherently trustworthy. It enforces strict identity verification and access controls, continuously monitoring for security threats.

Whether you’re here to ace your WGU D320 OA questions or simply want to stay ahead in the world of cybersecurity, this guide will equip you with essential knowledge. Let’s dive into the fascinating, ever-evolving world of cloud security and see why these concepts matter more than ever.

How to Use This Guide for the WGU D320 OA Exam?📖

The D320 Managing Cloud Security OA exam at WGU evaluates your understanding of cloud security principles, data management, and network security frameworks. This guide simplifies the key concepts of different logging levels (OFF/FATAL/ERROR/WARNING/INFO/DEBUG/TRACE/ALL), the data lifecycle, and the zero trust network to help you grasp the topics tested in the exam.

We also provide exam-style questions and practical applications to ensure you’re fully prepared for the questions on the WGU D320 OA exam.

Understanding Logging Levels in Managing Cloud Security For D320 OA📝

Logs are like a diary for computer systems. They record important events happening in applications or systems, much like a teacher keeps track of attendance and student progress. With logs, you can find out what is happening inside a system, identify the causes of failures, and sometimes anticipate future problems. In cloud security, logging matters because it helps you monitor the health and security of an application. Now, let’s take a closer look at what logging levels are and why they are so valuable.

What Are Logging Levels?

Imagine you’re playing a video game, and there are levels like “Easy,” “Medium,” and “Hard.” Logging levels are similar. They tell the computer how much information to record, depending on how detailed we want the logs to be. Logging levels are ordered by severity. The list below starts with the most restrictive setting (OFF), moves to the most severe events (FATAL), and works down to the most detailed output (TRACE and ALL). Let’s break them down step by step:

- OFF: This level means no logs are recorded at all. It’s like turning off your alarm clock completely. This is used when you don’t want any information recorded, maybe during maintenance.

- FATAL: This is for the most severe errors. Think of it like a fire alarm going off. A FATAL log means the system is in big trouble and might stop working entirely. For example, if the database crashes, a FATAL log will alert you.

- ERROR: These logs record major problems that don’t completely break the system. It’s like getting a flat tire—you can still drive slowly to the nearest repair shop, but it’s a serious issue.

- WARNING: These are like yellow traffic lights. They don’t stop the system from working, but they warn you to be cautious. For example, if your computer’s storage is nearly full, a WARNING log might let you know.

- INFO: This is general information about what’s happening, like a teacher announcing a quiz schedule. For example, a log might say, “The application started successfully.”

- DEBUG: These logs are super detailed and help developers understand what’s going on under the hood. It’s like a mechanic checking every part of a car engine to find a tiny problem.

- TRACE: This is the most detailed logging level, showing every single step an application takes. It’s like following someone’s footprints in the snow to see exactly where they went.

- ALL: This includes all logs, no matter how important or detailed they are. It’s like having a camera recording everything 24/7.
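
The ordering above can be sketched in a few lines of Python. This is only an illustration, not the implementation of any particular framework: the numeric values are arbitrary assumptions chosen so that more severe levels compare higher, and `Level` and `should_record` are hypothetical names.

```python
from enum import IntEnum


class Level(IntEnum):
    """Levels from the list above; the numbers are illustrative assumptions."""
    ALL = 0      # used as a threshold only: record everything
    TRACE = 10
    DEBUG = 20
    INFO = 30
    WARNING = 40
    ERROR = 50
    FATAL = 60
    OFF = 70     # used as a threshold only: record nothing


def should_record(message_level: Level, threshold: Level) -> bool:
    """Record a message only if it is at least as severe as the threshold."""
    if threshold is Level.OFF:
        return False
    return message_level >= threshold


# With the threshold at WARNING, an ERROR is kept and a DEBUG message is dropped.
print(should_record(Level.ERROR, Level.WARNING))  # True
print(should_record(Level.DEBUG, Level.WARNING))  # False
```

Seen this way, the configured level is simply a cut-off: lowering it lets more detail through, and raising it keeps only the serious events.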

Why Are Logging Levels Important?

Choosing a logging level is a lot like packing for a trip: the goal is to bring just the right amount of luggage. Pack too much and you end up hauling a heavy, awkward suitcase. Pack too little and you may be missing something you need. Logging works the same way. Log too much and you overload the system and waste storage; log too little and a crucial problem might escape your notice. Here’s why it matters:

- Security: Detailed logs like DEBUG or TRACE can accidentally store sensitive information, such as passwords. This can create security risks if the wrong people access the logs.

- Performance: Too many logs can make a system slower. Imagine writing down every word someone says—it’s hard to keep up!

- Prioritization: Logs help prioritize which problems need fixing first. For example, a FATAL error should get immediate attention, while a WARNING might be okay to monitor.
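
To make the security point concrete, here is a minimal sketch of one way to keep passwords out of detailed logs, using a filter from Python’s standard logging module. The regular expression, the `RedactSecrets` class, and the logger name are assumptions made for illustration; a real system would need patterns that match its own message formats.

```python
import io
import logging
import re

# Hypothetical pattern: matches "password=<value>" or "password: <value>".
SECRET_PATTERN = re.compile(r"(password\s*[=:]\s*)\S+", re.IGNORECASE)


class RedactSecrets(logging.Filter):
    """Mask password values before the handler writes the record."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()
        record.msg = SECRET_PATTERN.sub(r"\1[REDACTED]", message)
        record.args = None  # the message is already fully formatted
        return True  # keep the record, just with the secret masked


stream = io.StringIO()  # stands in for a log file in this sketch
handler = logging.StreamHandler(stream)
handler.addFilter(RedactSecrets())

logger = logging.getLogger("redaction_demo")
logger.setLevel(logging.DEBUG)
logger.addHandler(handler)
logger.propagate = False

logger.debug("login attempt user=%s password=%s", "alice", "hunter2")
output = stream.getvalue()
print(output)
```

The DEBUG message is still recorded, so developers keep the detail they need, but the password value never reaches the log output.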

Examples of Logging Levels in Action

To make this more relatable, here are some everyday examples:

- OFF: During a quiet study session, you don’t record anything to avoid distractions.

- FATAL: Your car breaks down completely, and you need a tow truck. A FATAL log alerts you to a system failure.

- ERROR: Your phone freezes while typing a message. The system logs the error to understand what caused the problem.

- WARNING: A low-battery warning on your phone. It’s not critical yet, but you need to charge soon.

- INFO: A reminder that you’ve completed your daily exercise goal.

- DEBUG: A math teacher checks every calculation step to find an error in the solution.

- TRACE: A chef follows a recipe step-by-step, logging every ingredient and action.

- ALL: Recording every detail of a science experiment to ensure nothing is missed.

How to Apply Logging Levels

Developers set these levels through logging frameworks such as Python’s logging module. These frameworks act like control panels, letting developers decide how much information to capture and tailor messages for different situations. Here’s a simple example:

```python
import logging

# Record DEBUG and everything more severe.
logging.basicConfig(level=logging.DEBUG)

logging.debug('This is a debug message.')
logging.info('This is an info message.')
logging.warning('This is a warning message.')
logging.error('This is an error message.')
# CRITICAL is Python's name for the FATAL level.
logging.critical('This is a fatal message.')
```

In this example, the logger records only messages at or above the level you set. With the level set to DEBUG, all five messages above are recorded, because DEBUG is the lowest of the levels used.
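
As a small follow-up sketch, raising the threshold changes which messages get through. Note that Python’s logging module names its levels DEBUG, INFO, WARNING, ERROR, and CRITICAL; it has no built-in TRACE, ALL, or OFF, which come from other frameworks. The logger name below is a hypothetical choice.

```python
import logging

logger = logging.getLogger("threshold_demo")  # hypothetical logger name
logger.setLevel(logging.WARNING)

# isEnabledFor reports whether a message at that level would be processed.
enabled = {
    name: logger.isEnabledFor(getattr(logging, name))
    for name in ("DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL")
}
print(enabled)

# Python treats FATAL as another name for CRITICAL.
print(logging.FATAL == logging.CRITICAL)
```

With the level at WARNING, the DEBUG and INFO entries come back as disabled, which is the behavior you would typically want in production.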

Importance For D320 OA

Understanding logging levels is a key skill for managing cloud security effectively. By knowing what each level does and when to use it, you can keep systems safe, secure, and efficient. Whether you’re tackling a WGU D320 OA question or solving real-world problems, mastering this concept will give you a strong foundation in cloud security. Keep exploring, keep learning, and always stay curious—there’s so much more to uncover in the world of technology!

The Data Lifecycle: A Journey from Creation to Destruction For D320 OA📝

Data is like a story: it has a beginning, a middle, and an end. The information we work with passes through several stages, starting with its creation and ending with its destruction. This sequence is known as the data lifecycle, the process data goes through as an organization collects, processes, stores, and eventually disposes of it. Understanding these stages is essential for managing cloud security, compliance, and data protection. Let’s take a closer look at the data lifecycle and why it deserves attention.

What Is the Data Lifecycle?

The data lifecycle is the process that data undergoes from the time it is created to the time it is disposed of. Think of it like growing a plant: it starts from a seed (data creation), is tended while it grows (data storage, usage, and sharing), and is eventually cleared away when it is no longer useful (archiving or destruction). Each stage calls for different approaches and measures for managing the data.

The Stages of the Data Lifecycle

- Creation

- What Happens: This is the beginning. Data is generated, whether by creating a document, capturing a photo, or recording a transaction. Examples include typing a report, taking a picture, or making a bank transaction.

- Security Considerations: At this stage, data needs immediate protection. Encryption ensures that even if intercepted, the information remains unreadable to unauthorized users. Secure connections, such as SSL/TLS, guard data while it is being transmitted or generated.

- Storage

- What Happens: Data is securely stored in databases, cloud services, or physical systems for future use. Examples include saving files to cloud storage like Google Drive or AWS.

- Security Considerations: Data at rest should be encrypted. Access controls restrict retrieval or modification to authorized users, who authenticate with a password or, better, multi-factor authentication. Regular backups are also needed, because data can be lost unexpectedly.

- Usage

- What Happens: Data is actively accessed and used for tasks such as analysis, decision-making, or creating reports. For instance, analyzing sales data to predict trends.

- Security Considerations: This is the phase in which data is most exposed to breaches. Enforcing role-based access control (RBAC) ensures that users can access only the information relevant to their jobs. Logging creates a record that can be used to trace unauthorized access, while access monitoring identifies such attempts in real time.

- Sharing

- What Happens: Data is shared between systems, organizations, or individuals. Examples include emailing a report, sharing files via a cloud platform, or integrating data across applications.

- Security Considerations: Encryption during transfer (using protocols like HTTPS) ensures data remains secure. Audit logs track who shared the data and with whom, providing accountability. Sensitive data should only be shared with verified recipients.

- Archiving

- What Happens: Data that is no longer actively used but still needed for compliance, historical reference, or auditing purposes is moved to long-term storage. Examples include keeping old tax records or project documents.

- Security Considerations: Archived data should remain encrypted and access-restricted. Periodic audits ensure that archived data remains intact and secure, while storage solutions like tape backups or specialized cloud services are used to retain data efficiently.

- Destruction

- What Happens: When data is no longer needed, it must be securely destroyed to prevent unauthorized recovery. For example, shredding hard drives or using secure delete software for digital files.

- Security Considerations: Data destruction must follow strict protocols to ensure it cannot be retrieved. Methods include degaussing magnetic storage, overwriting digital files multiple times, or physically destroying storage media. This step ensures compliance with regulations and minimizes data breach risks.

Why Is the Data Lifecycle Important?

- Security: At every stage, data is vulnerable to threats like hacking or accidental leaks. Applying appropriate security measures helps protect it.

- Compliance: Many laws, such as GDPR and HIPAA, require organizations to handle data responsibly. Managing data through its lifecycle supports compliance.

- Efficiency: Managing data through its lifecycle reduces clutter and makes it easier to find what you need.

Best Practices for Managing the Data Lifecycle

- Understand Data Sensitivity: Classify data based on its importance and sensitivity. For example, personal data might need stricter controls than public data.

- Use Appropriate Tools: Tools like AWS S3, Azure Blob Storage, and data loss prevention software can help manage data effectively.

- Train Employees: Ensure staff understands the importance of data security and how to handle data properly.

- Monitor and Audit: Regular audits can catch security gaps or compliance issues before they become problems.

- Have a Response Plan: Be prepared to act quickly if data is breached or lost, minimizing damage and restoring security.
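
The first practice, classifying data by sensitivity, can be sketched as a simple lookup from a classification label to the controls it requires. The three labels and the control choices below are illustrative assumptions, not a standard; every name in the block is hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"


@dataclass(frozen=True)
class Controls:
    encrypt_at_rest: bool
    require_mfa: bool
    audit_access: bool


# Hypothetical policy table: stricter controls for more sensitive data.
POLICY = {
    Sensitivity.PUBLIC: Controls(encrypt_at_rest=False, require_mfa=False, audit_access=False),
    Sensitivity.INTERNAL: Controls(encrypt_at_rest=True, require_mfa=False, audit_access=True),
    Sensitivity.CONFIDENTIAL: Controls(encrypt_at_rest=True, require_mfa=True, audit_access=True),
}


def controls_for(label: Sensitivity) -> Controls:
    """Look up the controls required for a classification label."""
    return POLICY[label]


print(controls_for(Sensitivity.CONFIDENTIAL))
```

Keeping the policy in one table makes it easy to audit: anyone can see at a glance which protections apply to which class of data.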

A Real-Life Example

Imagine a hospital managing patient records. Here’s how they handle the data lifecycle:

- Creation: A doctor records a new patient’s details.

- Storage: The data is saved in an encrypted database.

- Usage: Nurses access the data to provide care.

- Sharing: Patient details are shared with a specialist via a secure portal.

- Archiving: Older records are archived for legal compliance.

- Destruction: After the legal retention period, the records are securely deleted.
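
The hospital walkthrough above can be modeled as a small state machine. This is a sketch under stated assumptions: the allowed transitions are one plausible reading of the six stages, and `Stage`, `ALLOWED`, and `PatientRecord` are hypothetical names rather than part of any real system.

```python
from enum import Enum, auto


class Stage(Enum):
    CREATION = auto()
    STORAGE = auto()
    USAGE = auto()
    SHARING = auto()
    ARCHIVING = auto()
    DESTRUCTION = auto()


# Assumed transitions: data can move back and forth between active stages,
# but once archived it can only be destroyed, and destruction is final.
ALLOWED = {
    Stage.CREATION: {Stage.STORAGE},
    Stage.STORAGE: {Stage.USAGE, Stage.ARCHIVING, Stage.DESTRUCTION},
    Stage.USAGE: {Stage.STORAGE, Stage.SHARING, Stage.ARCHIVING},
    Stage.SHARING: {Stage.USAGE, Stage.STORAGE, Stage.ARCHIVING},
    Stage.ARCHIVING: {Stage.DESTRUCTION},
    Stage.DESTRUCTION: set(),
}


class PatientRecord:
    """Tracks which lifecycle stage a record is in and how it got there."""

    def __init__(self):
        self.stage = Stage.CREATION
        self.history = [Stage.CREATION]

    def advance(self, new_stage: Stage) -> None:
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(f"{self.stage.name} -> {new_stage.name} is not allowed")
        self.stage = new_stage
        self.history.append(new_stage)


record = PatientRecord()
for stage in (Stage.STORAGE, Stage.USAGE, Stage.SHARING,
              Stage.ARCHIVING, Stage.DESTRUCTION):
    record.advance(stage)

print([s.name for s in record.history])
```

Because destruction has no outgoing transitions, any attempt to use a destroyed record raises an error, which mirrors the requirement that destroyed data must not be recoverable.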

Importance For D320 OA

Understanding the data lifecycle is a cornerstone of managing cloud security. By knowing each stage and its security requirements, you can protect sensitive information, comply with regulations, and ensure efficient data management. Whether preparing for a WGU D320 OA question or real-world applications, mastering this concept is invaluable. The journey of data—from creation to destruction—is a story worth telling, right?

Zero Trust Network: A New Era of Security For D320 OA📖

As the corporate world becomes more interconnected, conventional approaches to network security are proving inadequate. Network-borne threats have evolved, and the need for a tougher protection system is more pressing than ever. Enter the Zero Trust Network (ZTN), an approach to protecting digital assets that rethinks how trust is granted. Let’s discuss what makes Zero Trust special and how it became a critical element of modern cybersecurity.

What Is a Zero Trust Network?

Zero Trust is an architectural model built on a ‘never trust, always verify’ principle. In contrast with the earlier approach, where everything inside the secured network perimeter is considered safe, the Zero Trust model requires verification of every user and device for every access request.

This model emerged in response to changes in how organizations work: remote work, cloud adoption, and mobile devices have blurred the line between internal and external networks. Under Zero Trust, an organization does not assume that users inside the network are trustworthy when granting access to resources. This makes it much harder for an attacker who has penetrated the network to move from one area to another without being blocked.

Core Principles of Zero Trust:

- Never Trust, Always Verify: Assume that every access attempt could be a threat, whether internal or external. Verification involves checking user credentials, device security status, and context like location or behavior.

- Least Privilege Access: Limit each user’s or device’s privileges to only what is needed for the task at hand. This reduces the exposure of sensitive information and limits the impact if an account is compromised.

Zero Trust is not a single technology but a set of practices implemented and supported by technologies. These include MFA, micro-segmentation, continuous monitoring, and dynamic policy enforcement, which together form a toolbox for protecting digital assets.
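
The two principles can be sketched as a single access decision. This is a minimal illustration, assuming a hypothetical role-to-permission table and hypothetical field names; real deployments evaluate many more signals. Note that the `on_corporate_network` field is deliberately ignored, because network location alone earns no trust.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission table implementing least privilege.
ROLE_PERMISSIONS = {
    "nurse": {"read:patient_record"},
    "billing": {"read:invoice", "write:invoice"},
}


@dataclass(frozen=True)
class AccessRequest:
    role: str
    action: str
    mfa_passed: bool
    device_compliant: bool
    on_corporate_network: bool  # recorded, but never used to grant access


def decide(request: AccessRequest) -> bool:
    """Allow only verified requests for actions the role actually needs."""
    if not request.mfa_passed:
        return False
    if not request.device_compliant:
        return False
    return request.action in ROLE_PERMISSIONS.get(request.role, set())


inside_no_mfa = AccessRequest("nurse", "read:patient_record", False, True, True)
remote_verified = AccessRequest("nurse", "read:patient_record", True, True, False)
over_reach = AccessRequest("nurse", "write:invoice", True, True, True)

print(decide(inside_no_mfa))    # False
print(decide(remote_verified))  # True
print(decide(over_reach))       # False
```

The first request is denied even though it comes from inside the network, while the verified remote request is allowed, which is exactly the reversal of the perimeter mindset.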

Key Components of a Zero Trust Network

- Identity and Access Management (IAM)

- Ensures that only authenticated and authorized users can access specific resources.

- Tools like multi-factor authentication (MFA) and single sign-on (SSO) strengthen this component.

- Micro-Segmentation

- Divides the network into smaller, isolated segments.

- Prevents attackers from moving freely within the network if one segment is breached.

- Continuous Monitoring and Analytics

- Tracks user behavior and device health in real-time to detect and respond to anomalies.

- Policy Enforcement Points (PEP)

- Acts as a gatekeeper, evaluating access requests based on predefined security policies.

- Encryption and Data Protection

- Encrypts data both in transit and at rest, ensuring that sensitive information remains secure.

- Dynamic Policies

- Adjusts security measures based on context, such as user behavior, location, and device status.
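
Micro-segmentation in particular lends itself to a short sketch: traffic between segments is denied unless a flow is explicitly listed. The segment names and the allow-list below are hypothetical, and real products express these rules in their own policy languages.

```python
# Hypothetical segments and the only flows permitted between them.
ALLOWED_FLOWS = {
    ("workstation", "web"),
    ("web", "app"),
    ("app", "database"),
}


def flow_permitted(source: str, destination: str) -> bool:
    """Default deny: traffic passes only if the exact flow is listed."""
    return (source, destination) in ALLOWED_FLOWS


def reachable_from(start: str) -> set:
    """Segments reachable from `start` by chaining permitted flows."""
    seen, frontier = set(), [start]
    while frontier:
        current = frontier.pop()
        for src, dst in ALLOWED_FLOWS:
            if src == current and dst not in seen:
                seen.add(dst)
                frontier.append(dst)
    return seen


print(flow_permitted("workstation", "database"))  # False
print(reachable_from("database"))                 # set()
```

A compromised workstation cannot talk to the database directly, and a compromised database segment has no outbound flows at all, so the attacker’s room to move is bounded by the allow-list.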

How Does Zero Trust Differ from Traditional Security?

Traditional security models rely on a perimeter-based approach, often described as a “castle-and-moat” design. Once inside the perimeter, users and devices are often trusted implicitly. Zero Trust, on the other hand, eliminates this assumption and focuses on strict verification for every access attempt.

Key Differences:

- Perimeter Focus: Traditional security secures the boundary; Zero Trust secures every access point.

- Access Control: Traditional models grant broad access within the network; Zero Trust uses least privilege access.

- Threat Response: Zero Trust continuously monitors and restricts attacker movement, whereas traditional models often lack internal controls.

Implementing a Zero Trust Network

- Start with Identity Verification:

- Implement MFA to verify user identities rigorously.

- Authenticate devices to ensure they meet security standards.

- Segment Your Network:

- Use micro-segmentation to isolate critical assets and limit lateral movement.

- Adopt Continuous Monitoring:

- Deploy tools to track user activities and detect unusual behaviors in real-time.

- Secure Your Data:

- Encrypt sensitive information to prevent unauthorized access during transfer or storage.

- Establish Dynamic Policies:

- Create adaptable policies that consider user behavior, device health, and context.

Benefits of a Zero Trust Network

- Enhanced Security: Minimizes risks by verifying every access request.

- Regulatory Compliance: Helps meet the requirements of data protection laws like GDPR and HIPAA.

- Scalability: Adapts to cloud environments, remote work, and modern digital infrastructures.

Real-World Applications of Zero Trust

Many enterprises, from technology companies to healthcare organizations, are adopting Zero Trust. For example:

- A Financial Institution: Deployed micro-segmentation and IAM to safeguard customer data from both internal and external threats.

- A Healthcare Provider: Applied Zero Trust to patient record data to meet HIPAA requirements.

Importance For D320 OA

Zero Trust is no longer just hype; it is increasingly the way forward in cybersecurity. Moving from implicit trust to continuous verification gives an organization a better chance of protecting its assets as threats evolve. Whether preparing for WGU D320 OA questions or securing a real-world network, understanding Zero Trust principles is an invaluable skill in today’s digital age.

Wrapping Up: Your Key to WGU D320 Success📖

Understanding logging levels, the data lifecycle, and Zero Trust Network is not just about passing an exam—it’s about building a solid foundation for navigating the world of cloud security. These concepts are practical tools that organizations rely on every day.

As you prepare for the WGU D320 OA, make sure you understand these topics, can give examples of each, and can explain their real-world use. Cybersecurity is not only an exam topic; it is a discipline that will set you up for success in today’s world.

Good luck with your WGU D320 OA! Stay determined, stay curious, and keep learning. You’ve got this!